So...they say AI is going to replace you...

Software Developers

Who are you…‽

Social media echo the “message” of the “programmers’ demise” following the emergence of “AI” and its high promises. Amid all the hype and popular nonsense-pumping, we seldom see any professional reasoning or actual introspection into what, exactly, they are all talking about…

So…they say software developers are to be replaced by machines…well…

The reader involved with the domain of software development1 will be sensitive to the definition—and interpretation—of the actual role discussed.

Let’s first differentiate between two terms:

Programmers write, debug, test, and troubleshoot code in various programming languages2 to ensure software functions properly. They interpret instructions from developers or engineers, focusing on technical tasks and coding, with deep expertise in coding and troubleshooting.

Software Engineers / Software Developers manage the entire software development lifecycle, including requirements gathering, planning, designing, coding, testing, and deployment. They handle project management tasks like requirements definition, coordination, prototyping, budgeting, and release management, overseeing the product lifecycle from design to updates and cross-team collaboration. They may delegate coding to programmers and work with clients or stakeholders to meet user needs.

Both roles are vital in the creation and maintenance of software: Programmers focus more on code, while software engineers shape the overall development process and project direction3.

Are we really surprised…‽

Over the last four decades we have witnessed the growing ease and accessibility of software development tools and styles4; the software development industry has put much effort into simplifying, speeding up, and generally improving the process.

In the 1980s we saw the birth of Fourth-Generation Languages (4GLs)—a major leap forward from traditional programming languages like C, Pascal, and COBOL. Their primary goal was to improve developer productivity for business applications, especially those heavily reliant on databases.

These offered the rapid generation of forms, reports, and data entry screens through high-level, English-like syntax that allowed developers to specify what they wanted to achieve rather than how to do it5.

Such tools were offered to professional developers building data-intensive business applications.
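The "what, not how" distinction can be made concrete with a minimal sketch. It uses SQL (often classed as a fourth-generation language) through Python's built-in `sqlite3` module as a stand-in; it is not one of the specific 4GL products of the era. The inventory rows, the table name, and both function names are hypothetical, invented purely for illustration.

```python
import sqlite3

# Hypothetical inventory rows (item, material, quantity), invented for illustration.
ROWS = [
    ("tap", "brass", 12),
    ("faucet", "steel", 3),
    ("tap", "steel", 7),
    ("valve", "brass", 0),
]


def totals_imperative(rows):
    """The 'how': walk every record and accumulate the totals by hand."""
    totals = {}
    for item, _material, qty in rows:
        totals[item] = totals.get(item, 0) + qty
    return sorted(totals.items())


def totals_declarative(rows):
    """The 'what': describe the desired result and let the engine find the steps."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE inventory (item TEXT, material TEXT, qty INTEGER)")
    conn.executemany("INSERT INTO inventory VALUES (?, ?, ?)", rows)
    result = conn.execute(
        "SELECT item, SUM(qty) FROM inventory GROUP BY item ORDER BY item"
    ).fetchall()
    conn.close()
    return result


if __name__ == "__main__":
    print(totals_imperative(ROWS))
    print(totals_declarative(ROWS))
```

Both functions return the same totals; the difference is only in who spells out the procedure, the developer or the tool.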

The promise was huge: No need to write any code!

The 1990s brought Visual Programming and Rapid Application Development (RAD), as graphical user interfaces (GUIs) became the standard, and application generators evolved to a more visual, "point-and-click" approach.

These tools offered drag-and-drop components, visual form designers, and event-driven programming, making it easier to create the user interface and connect it to the underlying business logic6.

Still, these were primarily used by professional developers and advanced power users. Whereas many ‘simple’ applications might have required minimal effort within the toolbox, more complex scenarios, demanding customer requirements, change management, and application evolution demanded more than ‘drag-and-drop.’

Often, they required actual coding (oh…dear…).

In the 2000s, with the widespread adoption of the internet, application generators had to adapt to web development, so the industry established the core principles of Low-Code/No-Code (LCNC), aiming at democratizing software development.

The focus now was automating business processes and creating simple web and mobile applications using Cloud-based platforms and visual modeling tools that let users connect data sources, design user flows, and automate tasks without writing code. This was a key step toward enabling "citizen developers" and business analysts, not just technical professionals, to create ‘applications’.

Since the 2010s we have heard much about Generative AI and Conversational Development, considered a/the “game-changer”.

This time the claim is that the rise of Generative AI and large language models (LLMs) has revolutionized how applications are built: The process is no longer just about dragging and dropping; it's about describing your needs in natural language.

The promise is that we may be able to build complex, full-stack applications, from the user interface and code to the database structure, through natural language prompts (that is, based on a simple text description). AI assistants will help with code completion, bug detection, and even writing tests.

All this magnificence will be available for both developers and non-technical users: AI serving as a co-pilot to accelerate the entire development cycle.

Through the ages, code and application developers did not create each and every piece from scratch: Software professionals have used pseudo-code references for years and relied on software packages7 to save time, avoid errors, and focus better on the goal at hand.

The better developers knew that many cases have a “textbook” solution, e.g., Operations Research problems; it would have been a waste of time to reinvent wheels that have already been tested for decades8…

Over this time, practices changed in many ways, and so did the ‘role interpretation’ of both software people and their firms. Hype and industry reverberation heralded “programmers” as modern-day gold diggers, for better and for…less… The industry was “pumping” and trumpeting the bottomless need for “developers,” spurring enormous growth in the channels that generate “keyboard technicians”: schools, online courses, literature, informal or less formal educational institutions, self-made freelancers, etc.

One no longer had to walk the tedious (and expensive!) trail of formal, academic software engineering education; such people would cost less (!) and, perhaps, be less of a headache in a team (‘real’ software engineers will lead them and tell them what to do, and if they suck, there are [so] many more out there…!).

The industry relied on the “formal”, well-educated professionals, and was quite lenient with lower-level ‘programmers’9.

So...they say AI is going to replace you...

IT social and professional media are known for their hyperventilated self-promotion.

It can be anything—from a grand solution10 to a grave disaster11.

When it comes to big investments and perceived market-share battles, things grow hyperbolic.

Now—it’s about what seems to be a profound shift in software development, driven by rapid advances in AI.

No one will mention (or hint at) the fact that AI has already seen two “winters”12, and that current trends and mechanisms are already being studied and recognized as having quite a few shortcomings and…well…issues…

The current claim is that traditional coding is increasingly being replaced by natural language design documents, which are then implemented by AI tools.

I suggest referring to the previous post in re…

Assuming the claim is true, it is further argued that the developer role pivots from manual coding to defining problems, understanding user needs, and specifying solutions, while AI handles the technical implementation.

They actually say this: We have pumped thousands of keyboard-punchers into the industry, and now we understand that what they are doing is “mechanical” and can be done by a piece of code...

They will not admit that they wasted loads of money and lost much time creating inferior solutions with lower-paid, less-professional, limited-scope, and oppressed technicians; they will now hold on to another magic wand or holy grail or something that will adorn their name amongst other gold rushers, and will fire all those redundant (?) people…

But they say…

Current hype promotes the transition from manual coding to AI Implementation: AI tools will execute much of the code generation based on plain English specifications, making traditional programming skills less central to the job.

Anyone in this industry has already seen more automation, more “friendly” means to carry out complex tasks13, and many have experienced technological leaps.

So, not much surprise here…

If the task of “code generation based on plain English specifications” is simple enough to drop the human bottleneck, can we shred the Agile Manifesto…?

The “promise” now is that leading-edge developers may see a reduction in coding effort14. They will spend most of their time on tasks reminiscent of product management, such as user research and prioritization.

But—sorry for my nagging—wasn’t this the role ab initio…?

Such claims mean this: Our leading-edge developers have lost their connection to project management, customer context, and delivery criteria, and now—a new software solution will do all this for them.

Right…?

The “developer slayers” highlight the following in the Great Big Beautiful Tomorrow15:

AI makes software quick and easy to build and clone, allowing software commoditization; success will lie in user understanding, distribution, market fit, and branding.

Has this claim not been regurgitated since…OK…remember the term “reusability”?

AI will be the “thing” to compensate for the software industry’s user misunderstanding (or ignorance), greedy distribution, draconian market policies, or fashion-like branding…?

Now, they say, the skills needed will include the ability to accurately discern real user needs (often revealed, not stated), identification of features or products that are truly valuable, and excellence in distribution, marketing, and user acquisition.

Wait a minute… Haven’t all those been on the table for almost half a century?

And you think “AI” will…do…this…instead of…what…?

Are we still talking about developers…?

“AI” prophets envision inevitable AI-driven automation, which will soon render manual coding skills as niche as traditional crafts replaced by mechanization. ‘Winners’ in this new era will be those who bridge human needs, strategic thinking, and organizational execution, rather than purely technical prowess.

Sorry, but do you think that an industry that has failed to deliver all this for the past four decades can magically be transformed by yet another software solution from the same industry…?

Professionals are now urged to pair technical skills with a deep understanding of users, markets, and business models: Communication, user empathy, go-to-market strategies, and the ability to translate human needs into clear specifications will be the most valuable assets in an AI-assisted world.

When were these NOT valuable…?

Are you at risk of being replaced?

Anyone well into contemporary AI mechanisms and having professional integrity will agree [to some extent] with the words of the lady, considered to be the first programmer:

Any “AI” solution is—still, yet, and will be—a software package.

You, the software professional, will understand the following from Mills Baker, Head of Design at Substack16:

I was talking to one of our top engineers (at Substack), one of the brainiest guys we've got, who's deep into this particular scene. And I was asking him about a particular project and what he thought the delta pre-LLM and post-LLM was.

And he was like, before LLMs, it could take 10 months.

After LLMs nine and a half months.

And I was like, okay, well, that's not a huge thing.

And he was like, no, these things don't solve complicated, hard problems in a particular space that well.

What they're really good for is starting from nothing and building stuff that doesn't have to support a lot of weight.

So you'll see these guys who post things like, I coded a thing for my TV in two days. And you're like, yeah, it's not that hard to go from zero to a thing for your TV.

Now try to modify Facebook so that it works in a different way. Well, it doesn't really understand the way Facebook does this. And it doesn't understand how this is set up and I couldn't get it to work right with this.

So it's the particularity, again, just like with creative writing, it's not there. Because they're generalization machines.

﹌﹌﹌﹌﹌﹌﹌﹌﹌﹌﹌﹌﹌﹌﹌﹌﹌﹌﹌﹌﹌﹌﹌

Judging by the past half century of IT and the current state of [what’s perceived as “AI”], we may [almost] safely say that, based on a software developer’s value to the firm, the risk of being “replaced” by AI is not high.

Here are some points to consider:

Not all firms go berserk over AI (e.g.—Apple).

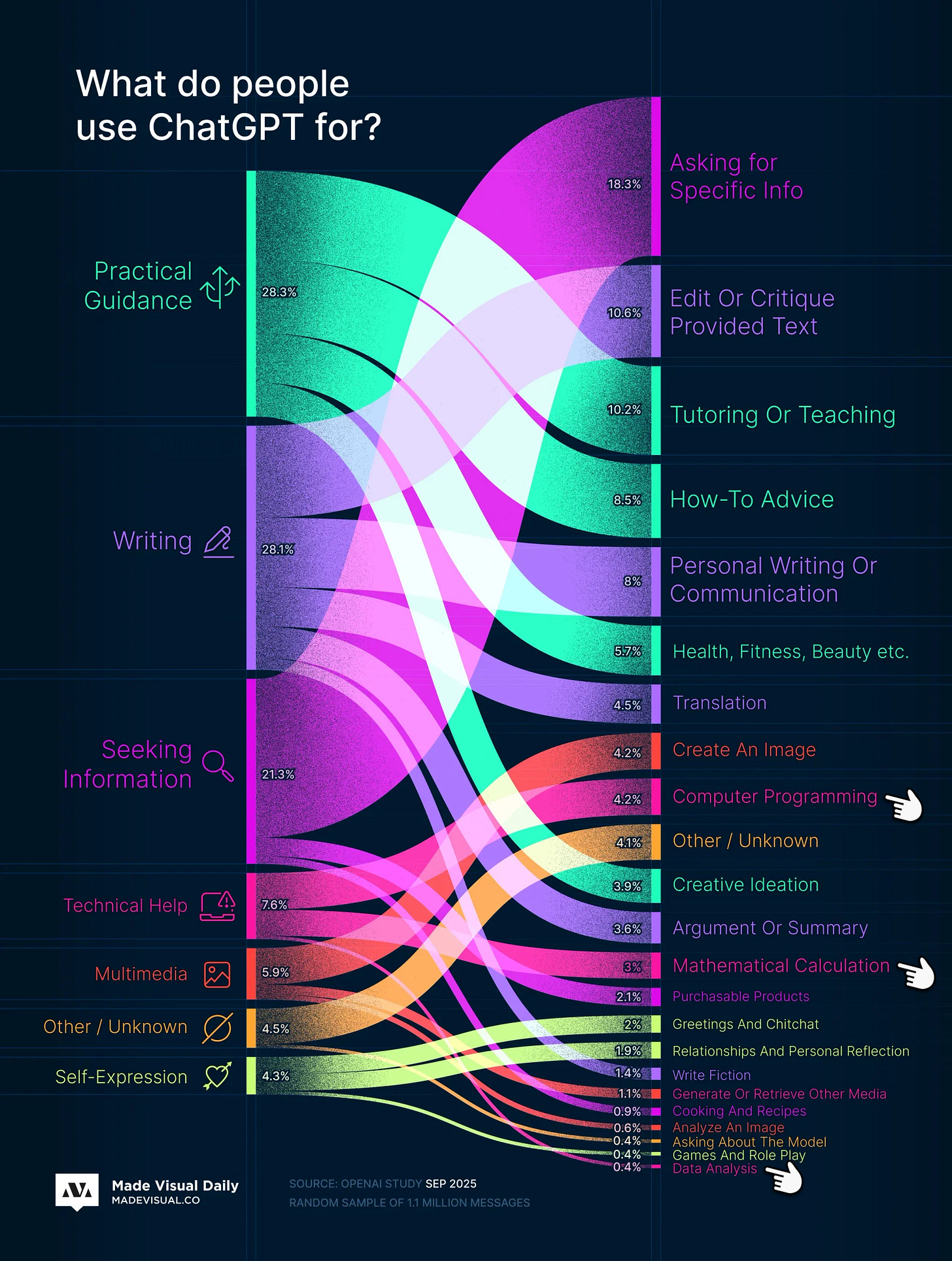

One must separate the propaganda ‘chaff’ from the ROI ‘wheat’.

Currently, fact-based reviews of what is actually done with AI do not support extreme replacement of developers; for example:

Adapted from here

Note the pointing fingers and ask:

Does AI replace so much of software development efforts?

If it does—can AI proponents and enthusiasts suggest AI-generated code libraries, fully developed modules or systems, or similar “threatening” gains?

It seems that interest in “AI” for software development declined between ’24 and ’25:

Honest records show what any software person seasoned with automation knows: Development automation does not necessarily hasten delivery; often, it doesn’t make the process or the deliverables better, either…

In Google’s case: “…around 30% of code now uses AI-generated suggestions or whatever. But the most important metric […] is how much has our engineering velocity increased as a company due to AI […] and our estimates are that number is now at 10%.”

I believe Google’s report suggests a rate much higher than most other firms’ in the industry…17

Indeed, as with every technological revolution, we can see two ‘paradigms’:

The contemporary, progressive approach, which fully embraces AI-generated solutions and focuses on higher-level problem-solving.

Here we will find those experimenting with tools, building and destroying stuff, “playing” around and adapting their language, skills, and processes to anything that will show value.

The other side is more “conservative”, believing deep technical knowledge and hands-on coding remain essential no matter what.

In both cases I believe professional software developers will be the brains taking the firms forward: They will either progress and learn and utilize new tools and options to better their domains, or will strengthen their firms and adapt them to evolving technologies.

So…Am I safe…?

Some firms will use “AI” as the current excuse18 to “refresh” their resources.

Managers who are forced to demonstrate “AI” ROI will have to show “redundancies”.

That’s not new; it’s just another “justification”.

Not many firms can compare themselves to the Japanese work culture and its manpower handling in the face of change: Robotics replaced many line workers, who were then trained to operate the new machines efficiently and effectively, maintain (and fix) them, perform quality assurance tasks, and develop, improve, and upgrade the new systems.

If you are in a position where you can influence the process—do!

Ask yourself whether you are the one who knows and uses tools or the one who knows business.

If you think you are below this ‘imaginary’ separating line, or if you feel the wind of change—but not the magic of the moment19—take action!

Inspect, adapt, and evolve.

~~~~~~~📇~~~~~~~

So...they say AI is going to replace you...

Conclusion [?]

See also—

I explicitly exclude those who practice programming as a hobby; for them, any advance in their respective areas of interest is welcome, a basis for learning and progress, regardless of “what people say”.

They will embrace AI as a toddler might hug a small plushy or chase around a lizard.

They experiment and ‘play’ and enjoy and have fun.

Unlike the rest of us, who do this…for…a living…

The discussion here is meant to cover any and all software applications, both contemporary and existing ones.

Too often, media hype aims at “high-tech” firms and present-day technologies; this is a typical “looking under the lamppost” behaviour.

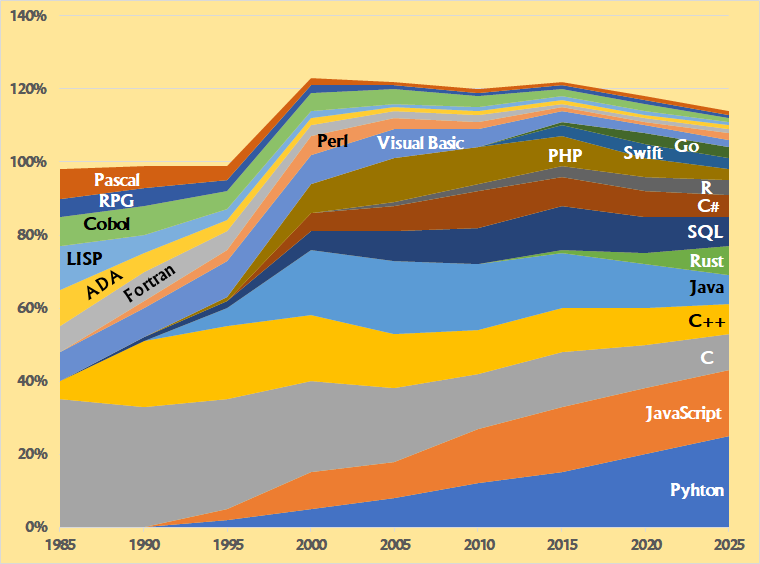

Whereas a thorough review of programming languages and technologies is much too broad for the current discussion, one might consider trends in the evolution of this interesting domain; for example, the distribution of programming languages in the last four decades:

There’s a ‘common’ differentiation between the Junior, who knows and uses tools, and the Senior, who knows business.

We’ll get to this towards the end of the post.

It used to be a ‘joke’ when one said, “My little kid can program this…”—but kids have been accessing computers and creating applications for years now…

I met an engineer in a production plant, whose teenage son had created an MS Access application to let his father manage his large inventory of taps and faucets.

Before kids created such applications on their home PCs, before there were home PCs, you could have met them in their parents’ offices, working on mainframe computers (lucky bastards…!) or mid-range minicomputers (do you remember DEC, RIP?).

It used to be a ‘joke’ when one said, “My grandmother can program this…”—but elders did manage to master computer systems and make good use of them…

I fondly remember that elder dentist who frequented our Apple office: He had a Macintosh Plus, on which he composed music. That patient man would swap floppy disks dozens of times before he could make any notable progress with his music, and yet—he did.

Clipper and dBase were popular examples.

At the time, Apple platforms offered better tools and options, yet these are lost in time and memory…

Tools like Microsoft Access and early versions of Visual Basic became the standard for many internal business apps.

I met this in interviews: Candidates were unfamiliar with basic Computer Science concepts (from data structures to algorithms, analysis, complexity…).

To my astonishment, even academic graduates openly admitted to no actual practical implementation of their expensive Computer Science education!

Such packages were available on mainframe computers—mathematical, statistical, graphic, and many other packages.

Such structures are not foreign to any developer in today’s programming landscape, and are [practically] taken for granted…

Many programmers admit [and sometimes—take pride (!) in] copying code snippets, modules, templates, or complete solutions from various sources (“I just Google this…”).

Is this a “bad thing”?

I don’t think so…

There’s a little “…but…” here, but…

I have had the privilege of encountering several variants of the Traveling Salesman problem. I ‘solved’ it during my undergraduate studies, and later referred to ‘known’ solutions.

In one case, I received an IBM MF tape in an envelope, with a couple of pages hand-typed by German programmers in the mid-20th century…

And I have also witnessed a product that faced a certain variant of a known allocation family of OR problems; I have seen many (many!) well-developed solutions and algorithms for working this out.

But the developers, who had worked on this problem for [almost] a decade, had lost any clue of algorithm or structure in their solution.

It took a professional advisor almost a year to try and “reengineer” the module and understand what was going on in there.

To my knowledge, this module has remained a very black box, unverifiable, unvalidatable…

“Access is going to be THE data-base application for anyone” said Microsoft when they had no decent database management solution.

While others had excellent ones, Access was a very poor alternative, not even a ‘competition’.

Sure enough, when Microsoft (finally) introduced MS SQL, they immediately turned against Access, trashing it…

I can still hardly hear in one ear because of the trumpets and drum rolls of Y2K…

More on this—in the last post in this short series.

Anyone involved with contemporary DevOps would admit that automation and “intelligent” workflows have elevated work quality, lowered risks, reduced problems and mishaps, and allowed development teams more time to focus on value delivery.

I, personally, ignore any and all ‘percent’ values I see in [most] popular articles and social media discussions: Without actual data, measurements, and comparison criteria, these are empty claims (not to say bullshit).

% work reduction may be discussed after the fact.

Let’s wait a year or so, shall we…?

The Sublime, 13.9.25

The reason for [just] 10% will also be discussed in the next post…😊

Note—no quotation marks.

Pause to listen and sing along…