So...they say AI is going to replace you...

Software Test and Quality Assurance Engineers

My “short” answer…

When I hear people seriously considering the replacement of Software Quality Assurance Engineers with “AI”—my initial response would be something like—

A necessary clarification

The terms “Software Test Engineer” and “Quality Assurance Engineer” are often used interchangeably; however, these are different roles with distinct responsibilities, mindsets, and scopes of work, especially in mature software development environments.

Software Testers are primarily focused on finding bugs by executing manual or automated test cases.

Their operations are reactive, as they are typically involved after development is complete, and are more concerned with software validation: ensuring the product behaves as expected under various conditions (i.e., following predefined Test Scripts).

Testers are perceived as executing manual Test Cases, reporting bugs, and performing regression, smoke, or sanity testing.

Testers may require minimal coding (if any) but will be expected to present strong attention to detail and domain knowledge.

QA Engineers are proactively involved with the entire Software Development Lifecycle (SDLC), from requirements gathering through deployment, aiming at preventing defects, not just finding them.

QA Engineers aim at improving processes: They have to ensure that quality is built into the product ab initio, inspecting requirements, design, and usability throughout the SDLC. This charter includes:

Design of test strategies and frameworks.

Creation and maintenance of automated test suites, and implementation of CI/CD pipeline checks. For this purpose, they will often be required to have strong programming or scripting skills, and familiarity with automation tools, version control, and DevOps practices.

Requirements refinement through collaboration with developers and Product Managers.

Definition, maintenance, and analysis of quality metrics.

Advocating quality standards and best practices across product teams through taking a strategic, process-oriented approach.

The “Software Tester” role (as defined above) ‘eroded’ quite a while ago; automation tools, DevOps practices, and more ‘evolved’ software development approaches [and attitudes] have offered good mechanisms to perform such tasks better, faster, and more reliably, making ‘Quality’ a team effort and value rather than a burden carried by “testers.”1

AI might further enhance this direction.

Hence, we will focus on Software QA Engineers.

A little wider perspective, if I may…

For [at least] the past half century, software development challenges have grown more complicated: Requirements, use cases, application domains, and practical implementations have raised complexity2 on [almost] any production parameter.

Technological advances have not alleviated process pains, although a wide array of approaches, techniques, and frameworks has been devised to better streamline and improve the SDLC.

The Manifesto for Agile Software Development was meant to address limitations and challenges of traditional development methods applied up to the 1990s; these were characterized by rigidity, heavy documentation, and inflexibility in meeting varying, unstable, or not well-defined client requirements.

Additionally, the software industry had experienced rapid growth and quickly evolving technological opportunities, as well as a growing pressure to deliver value quickly; this aspect has not slowed down since.

Under these circumstances, Software Engineering could not “behave” like an “engineering” domain: In its unfolding dynamic reality, full initial specifications, early commitment to requirements, and adherence to separate, critical phases had rendered practitioners inflexible to change during the process.

Software development turned out to be expensive and slow, and often failed to achieve clients’ goals on time and at a satisfactory level.

Failure rates in the IT industry speak for themselves:

~70% of Software Development and IT projects fail, mainly due to requirements (~40%), scope (~30%), and communications issues (~60%).

>85% of Digital Transformation projects fail: ~44% do not meet business requirements, where complexity and insufficient leadership contribute, too.

~75% of government IT projects fail: Complexity underestimation (~35%) and management support (~30%) contribute much to this.

~70% of Business Process Change projects fail, primarily for direction reconsiderations (~60%), organizational culture (~50%), and lack of a clear vision (~30%).

~85% of heralded AI R&D projects fail, due to high complexity and failure to reach production, but mainly due to unclear (unset?) goals.

The Agile Manifesto stated:

…we have come to value:

Individuals and interactions over processes and tools

Working software over comprehensive documentation3

Customer collaboration over contract negotiation

Responding to change over following a plan

So the Agile Manifesto valued distancing from precisely those factors that account for the main causes of failure…

Can “AI” meet software development’s quality assurance expectations?

Referring to the QA Engineer’s role above, its main professional objectives [which are unique, unshareable with other domains] would be—

Design of test strategies and frameworks.

This objective presents the basic “accountancy” problem of the cost of validation vs. the cost of errors through the SDLC: How much effort (i.e., $) would the organization be willing [or able] to put into delivery validation and verification against the cost of defects, functional deviations, customer dissatisfaction, etc.?

This task relies on individuals, interactions, and collaboration in the ever-evolving organization, with its internal and external interfaces4; it cannot possibly work only with prescribed processes5, tools, or comprehensive documentation.

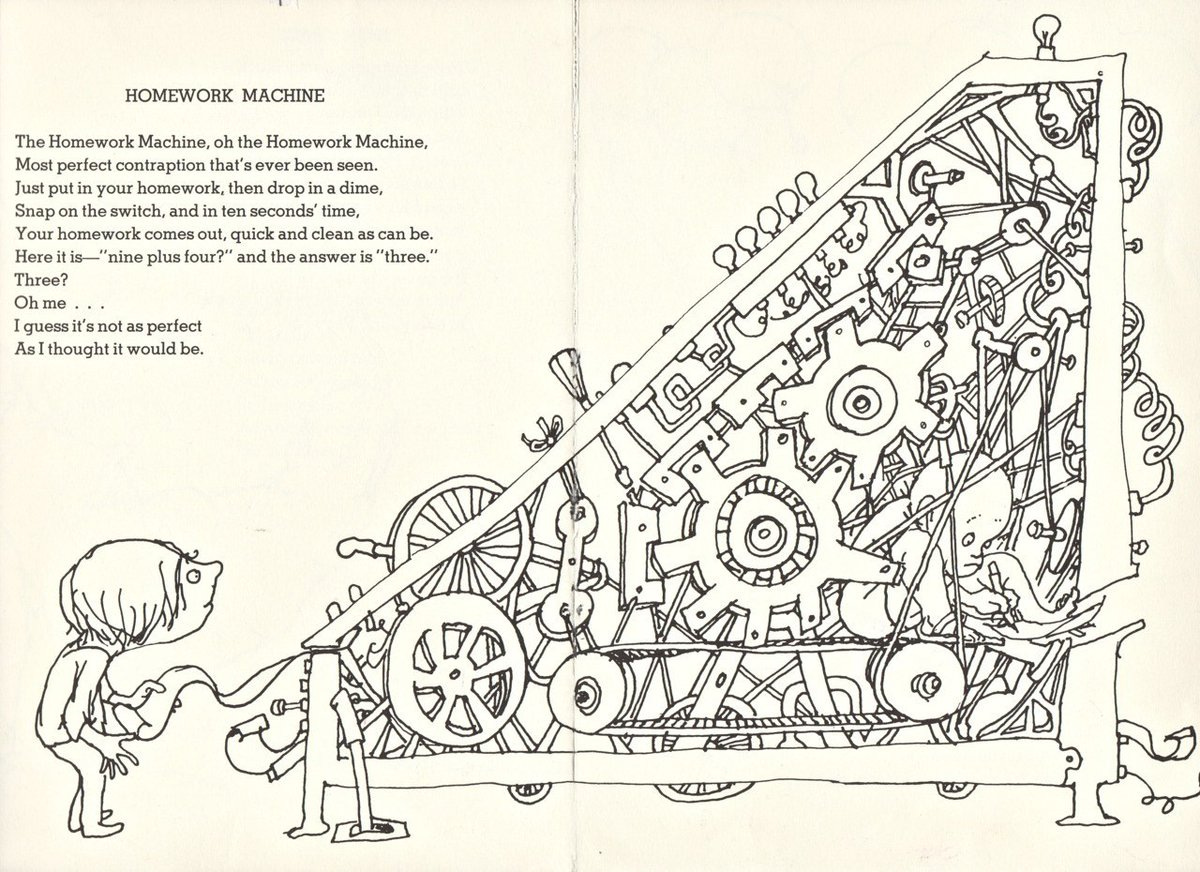
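The validation-versus-defect cost question above can be made concrete with a toy model. The sketch below is only an illustration: the function names, the assumption that each unit of validation effort catches a fixed share of the remaining defects, and all the numbers are hypothetical, not taken from any real project.

```python
def total_cost(effort, cost_per_unit, defect_cost, catch_rate):
    """Hypothetical model: money spent on validation plus the expected
    cost of the defects that still escape after `effort` units of it."""
    validation_cost = effort * cost_per_unit
    # Assumption: every unit of effort catches `catch_rate` of what remains.
    escaped_share = (1 - catch_rate) ** effort
    return validation_cost + defect_cost * escaped_share


def cheapest_effort(max_effort, cost_per_unit, defect_cost, catch_rate):
    """Return the effort level (0..max_effort) with the lowest total cost."""
    return min(
        range(max_effort + 1),
        key=lambda e: total_cost(e, cost_per_unit, defect_cost, catch_rate),
    )


if __name__ == "__main__":
    # Illustrative numbers only.
    best = cheapest_effort(10, cost_per_unit=5_000, defect_cost=100_000, catch_rate=0.3)
    print("cheapest effort level:", best)
```

Even in this simplified form, every input (what a defect costs, how effective a unit of validation is) has to be estimated and agreed upon by people, which is where the individuals and interactions come in.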

Now, if teams have to compile bodies of information to support and enable LLMs in order to provide any value in this respect, wouldn’t we get back to the same point, where we cannot create, maintain, and sustain such content…?

Requirements refinement by collaboration with developers and Product Managers.

If comprehensive documentation and contract negotiation are less valued—what can the QA engineer work with?

If following a plan is less valued—how would “requirements refinement” be?

Why would we think that AI/LLM can cope with undocumented, vague, or oral-tradition synonyms, inflections, and writing variations, where team members cannot sustain them over time?

If we aim at individuals and interactions over comprehensive documentation, contract negotiation, and following a plan, do AI barons expect teams to work for the mechanism Moloch6?

Definition, maintenance, and analysis of quality metrics.

All these translate into documentation and a collaborative quality view.

Metrics should be ‘alive,’ agile, and evolving, and often this will be accomplished through human interaction rather than formal communications.

Release criteria might include a variety of parameters, indicators, dependency and compliance considerations; some might require ‘manual’ intervention based on a broad understanding of requirements [from multiple origins], contexts, and related impact and implications7.

Here we will also find proliferating BI8 practices: For natural language in BI tools to work, someone in the organization has to organize all database fields, write a dictionary9, and define the terms so they’re consistent for everyone.

What happens is that, instead of making work easier, the whole natural language story just complicates everything: Instead of interacting with the tool about the data, we are talking about how to talk to it about the data.

Advocating quality standards and best practices across product teams through taking a strategic, process-oriented approach.

Process, you say…?

While AI/LLMs may help digest large bodies of content and assist with data processing, the real question is this:

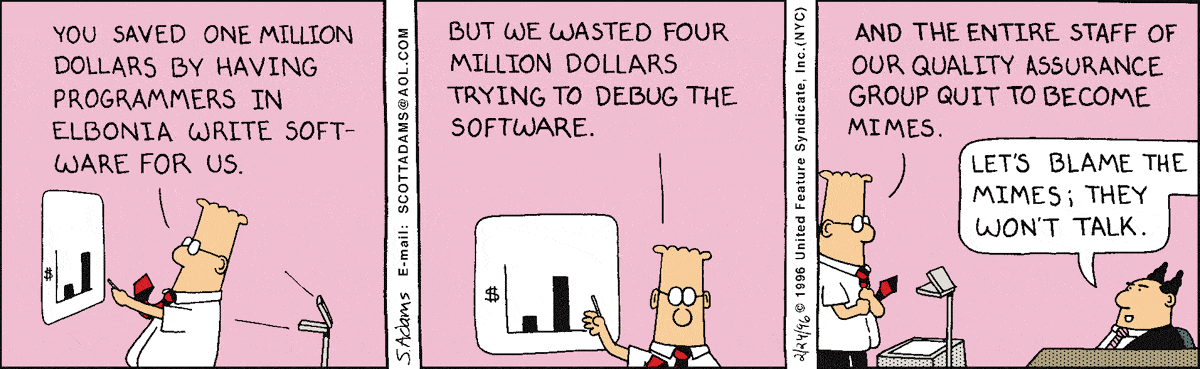

For over half a century, the IT industry has not done exceptionally well with software quality10. Does anybody think that this industry can improve its performance using yet another software product, which is neither validatable nor verifiable?

Can software QA engineers be replaced with AI?

They may be assisted by it.

They may include it in processes.

They will have to validate AI-generated content.

They will have to verify AI-generated responses.

And, sadly, the most important aspect would always be—

They will take the blame for quality issues, as no one can point a finger at a machine or dare to doubt a technology that is hyped as the pinnacle of human accomplishment.

A strategic perspective

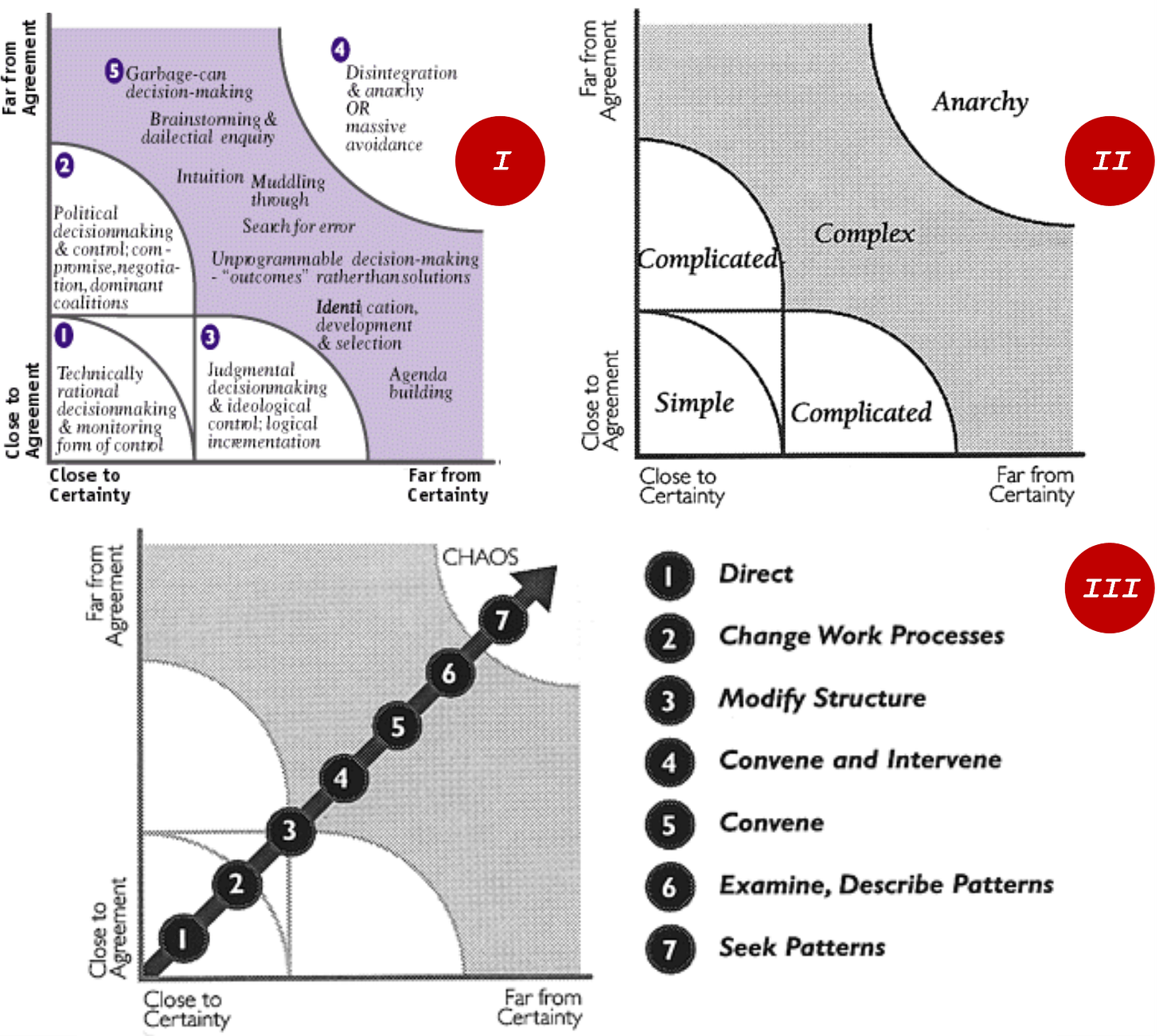

A good perspective to assess QA Engineers’ role with a product may be using Ralph D. Stacey’s Agreement & Certainty Matrix.

The matrix maps management actions in a complex adaptive system by the degree of certainty and level of agreement on an issue at hand.
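As a rough sketch, the matrix can be read as a lookup over two scores. The 0 to 1 scales, the threshold values, and the function name below are assumptions made purely for illustration; the matrix itself is qualitative and draws no numeric boundaries.

```python
# Hypothetical cut-offs; the matrix itself does not define numbers.
HIGH = 0.7
LOW = 0.3


def stacey_region(agreement, certainty):
    """Map agreement and certainty scores (0 = none, 1 = full) to a region."""
    if not (0 <= agreement <= 1 and 0 <= certainty <= 1):
        raise ValueError("scores must be between 0 and 1")
    if agreement >= HIGH and certainty >= HIGH:
        return "simple"
    if agreement < LOW and certainty < LOW:
        return "anarchy"
    if certainty >= HIGH:
        # The way forward is known, but stakeholders are not aligned.
        return "complicated (low agreement)"
    if agreement >= HIGH:
        # Stakeholders are aligned, but outcomes are uncertain.
        return "complicated (low certainty)"
    return "complex"


if __name__ == "__main__":
    for scores in [(0.9, 0.9), (0.5, 0.9), (0.9, 0.5), (0.5, 0.5), (0.1, 0.1)]:
        print(scores, "->", stacey_region(*scores))
```

Scoring a real product this way is itself a judgment call, and the scores shift as agreement and certainty change over the life of the product.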

QA Engineers’ behavior differs in each of the regions:

In simple cases—there’s some sense to lean towards ‘traditional’ methods.

The QA Engineer’s effort is ‘simple’, too: Requirements and definitions are fixed, there is not much risk with technology, monitoring is straightforward…

They can direct the teams on testing and quality, and be required to review work processes only occasionally [if at all].

What could possibly go wrong?

Complicated cases can be of two types:

There’s less agreement or alignment amongst stakeholders.

Such cases highlight the necessity to manage individuals and interactions over processes and tools, the difficulty of establishing comprehensive documentation, the need for customer collaboration before getting to contract negotiation, and the frequent response to change rather than following a plan.

As this scenario involves more interaction, ‘politics’, negotiation skills, etc., QA Engineers should be involved throughout the process and aware of changes, advancements, agreements, disagreements, expectations, and implied prospects.

No AI mechanism will be able to replace them there.

Often, AIing such cases will only make things worse.

There are doubts or risks associated with uncertainties, e.g., technology, investment, market, competition, etc.

Such conditions require QA Engineers to prepare for:

Changing strategies and frameworks,

Requirements refinement through collaboration with developers and Product Managers,

Inspection and adaptation of quality metrics,

And—an adaptive approach to advocating quality standards and best practices (which might change through getting more clarity).

This multifaceted effort is seldom ‘templatable’ (if at all).

AI is, in this respect, yet another computational mechanism to support data and information processing.

Not a replacement.

Complex cases include those “things”, which are hard to measure, count, enumerate, formulate, or template.

AI can assist in some tasks, such as describing patterns. But then we’re just talking about a statistical power-tool, not a functional replacement.

The Anarchy level is where no one wants to be.

Everyone wants to avoid it.

Nothing really tells you what to do or advises you how to behave.

It’s the time for motivational quotes, prayers, and leadership skills.

Who would you trust to throw the dice…?

﹌﹌﹌﹌﹌﹌﹌﹌﹌﹌﹌﹌﹌﹌﹌﹌﹌﹌﹌﹌

In my opinion, QA Engineers—as defined above—will not only retain their positions: They will be required even more!

What makes QA Engineers valuable?

Their skill-sets, multidisciplinary capabilities, and proactive practices will make them precious resources in any complex scenario, and even more so where AI components are applied. Such components introduce new levels of risk, uncertainty, and delivery challenges, which require new and enhanced means of systems analysis and inspection.

Will they be fired “because of AI”?

I assume they might be, like many other employees who are ‘sacrificed’ either to ‘justify’ the cost of AI or to compensate for poor past resourcing decisions11.

What could QA Engineers do to keep and raise their value?

Avoid drifting down, back, to being a “tester”.

Keep up with technological advancements in general, and AI—in particular.

One must understand capabilities, options, and risks (!) to provide good advice and perform better. In anything.

~~~~~~~📇~~~~~~~

So...they say AI is going to replace you...

Software Testers ⛳

Conclusion [?]

Post factum testing often renders testers a nuisance.

When they are not involved with SDLC—product teams regard them as ‘outsiders’, pesky individuals.

Everybody suffers.

Complicated problems can be solved using known, established rules, algorithms, or structured processes, but complex problems, characterized by uncertainty and numerous interrelated factors, cannot be resolved through predefined methods.

Too often, people tend to adopt complicated thinking where they should have, actually, consciously managed complexity.

Once, I attended the opening session with the new Agile/Scrum coach.

Before even introducing himself, one development manager turned to him and said:

“Can you, please, tell us about Kanban Scrum?

We heard that this approach requires less documentation than…what we do now…”

And I looked at him discombobulatedly: As you lead things now—you practically document nothing, communicate poorly, and withhold any information transfer or sharing.

Could you possibly do less than that…‽

Consider The True Picture of an Organization by the Rummler-Brache Group.

“If thou hast run with the footmen, and they have wearied thee, then how canst thou contend with horses?” (Jeremiah 12:5)

If the IT industry failed at documentation (and devised means to bypass or otherwise lessen it) when there were good justifications for it and internal and external stakeholders interested in it, why would one expect the same organization, now filled with new-age, less documentation-oriented resources, to put much effort into doing so for a…machine…?

Such decisions might be taken in formal or informal meetings, discussions, or exchanges, by agreed-upon tasks, yielding possible modifications or commitments.

BI market grew from ~$10.5B in the 2010s to ~$38B in 2025, expected to reach $56B in 2030.

There was a case where a programmer had to apply certain object-naming functionality.

The programmer was in haste, so he “reused” several ‘unused’ fields with some complementary code magic. This wizardry was not documented, of course.

┉┉┉┉┉┉┉┉┉┉┉┉┉

Years later, someone tried to understand that object-naming functionality.

Nothing made any sense. It resembled nothing else anywhere.

┉┉┉┉┉┉┉┉┉┉┉┉┉

It took several hours for the old programmer, now manager, to dig into his own doings, after which he was able to provide some general explanation and ‘advice’ on how it [possibly] works…

In 2002, the National Institute of Standards and Technology (NIST) published a landmark study, estimating that software bugs cost the U.S. economy $59.5 billion per year in direct and indirect losses (time and money spent identifying, fixing, and recovering from bugs, lost productivity, litigation, customer compensation, reputational damage…); about one-third of these costs could have been avoided with better testing and early intervention.

In 2022, it was reported that poor software quality cost U.S. organizations $2.41 trillion, a massive increase from past decades. As expected, this accounted for system outages, cyber incidents, failed deployments, operational disruptions, and legal settlements, all exacerbated by the rise of digital business, increased software complexity, and business dependency on tech systems.

More on this—in the next post.